I have found a fine introduction to Heaviside’s methods in Spiegel’s “Applied Differential Equations”, 3rd ed, 1981, Prentice Hall. To be specific, pages 204-211. I can’t very well say, “Don’t buy the book just for this” – because that’s exactly what I did!

Let me emphasize that, as far as I know, Heaviside’s methods are now of primarily historical interest. I would not say that Laplace transforms make Heaviside’s methods rigorous – but that Laplace transforms provide a rigorous alternative which, like Heaviside’s, lets us do algebra instead of calculus.

Like Laplace transforms, the quick use of Heaviside’s methods takes advantage of shifting properties, linearity, and tables of known results to speed up calculations. I’m not going to take them that far. With Mathematica® or another symbolic system, I see no need to go beyond the introduction to Heaviside’s methods. What I wanted to see was: just what was Heaviside’s fundamental idea? It turns out that his fundamental idea suffices, given other tools available today.

Of course – as we have seen and as we’ll see below – Mathematica can quickly solve the differential equations to which Heaviside’s methods apply (linear, with constant coefficients). We don’t need Heaviside…

…but it turns out there’s at least one question Heaviside’s methods can answer very, very quickly: find a particular solution (rather than the general solution). I don’t know that I will ever use Heaviside for that, but I know that I could.

One last thing. For my present purposes, it suffices that I will find solutions to a differential equation – and I will confirm that my answers are solutions… but I’m not going to try to prove anything at all. I don’t know what the limitations of this method are – instead of confirming that the differential equation under consideration satisfies some set of conditions, I’ll simply confirm that the answer works.

With that, let me roll up my sleeves and show this to you.

Consider the equation

y'(x) = f(x).

Write it using the diiferential operator D:

Dy(x) = f(x)

and then formally invert that:

.

For reasons that will become clear, I prefer the final form, with . This is the opposite of what we do with matrices. The inverse of a matrix A is always written

, and never written as 1/A…. The reason for that is the non-commutativity of matrix multiplication. In general,

,

so B/A is not well-defined: it could have two distinct interpretations. But we will see that is a plausible notation for the inverse differentiation operator.

Now here’s the key to

:

we know what is. Let F(x) be an indefinite integral of f(x)

and then

y(x) = F(x) + C.

So maybe we could we say that

.

OK, now let’s try something just a little more complicated: a general linear first order differential equation with constant coefficients. (You’ll see why I chose -p rather than p.)

y'(x) – p y(x) = f(x).

We rewrite that as

(D-p) y(x) = f(x)

and then

.

We know the solution to that, too. This time we let

and then

.

It looks like we can translate as

. And what I’m going to do is show that it works. What I’m not going to do is give you a theorem specifying the conditions under which it works. After all, having once obtained a putative solution, we can verify that it a solution. (We cannot always be sure that we have found all solutions.)

Incidentally, when p = 0 we get the previous result, for 1/D.

Maybe I should remind us where that solution came from. is an integrating factor for this first order differential equation. That is, if we multiply the equation

y'(x) – p y(x) = f(x)

by ,

then the left hand side is the derivative of a product, and we have

.

Now integrate both sides, getting

and then

.

That trick works more generally. If p were a function p(x), then the integrating factor would be …. But I only need a constant -p, and -px is its indefinite integral. Someday I might play with a function p(x), but I suspect that I can only handle a constant p. If we have a function p(x) instead of a constant p, then our differentiation operators D – p(x) will not commute…. Is that a fatal flaw?

Anyway we have a plausible definition of … and it does reduce to our earlier plausible definition of

.

What about multiple factors? Suppose we had

(D-1)(D+2) y(x) = f(x).

That is, since we can expand those two factors as

what we have is the differential equation

y”(x) + y'(x) – 2 y(x) = f(x).

Can we simply write

? And then solve one step at a time? That is, first compute

and then

?

Does it matter if we write

?

Yes we can do it one step at a time; no it doesn’t matter what order we take.

It get’s better. Algebraically, we could expand an expression like

by partial fractions:

Instead of a sequential calculation, can we do two separate ones and add them?

Yes, we can.

The only caveat is that if we have a repeated factor, say,

then that is actually the partial fraction expansion – we have no choice but to proceed sequentially, because I have no idea what – equivalently

– is.

Let me remark that I was surprised to see this work. Yes, I know that I can rewrite a second order equation as a pair of first order equations – but I wasn’t expecting this form of it!

OK, we’re just about ready to dive in. Let me define a function

The first entry is our constant p from D-p… the second entry is the RHS f of the differential equation… the third entry is the independent variable… and the fourth entry is the constant of integration.

Let me emphasize that if I have , I input p. Maybe you would have done it differently, but I think of p as the root or zero of (D-p).

Actually, before I jump in, let me summarize this introduction to Heaviside’s operator calculus.

First, we define the inverse of D-p as the integral

Second, we believe that if we have a composite differential operator such as

then we can apply them consecutively to get the inverse of the composite.

Third, if p and q are distinct, then we have an alternative available to us: expand the composite operator using partial fractions, invert each piece, and then add them up.

Now let’s go.

Example 1

Let’s start with the equation I solved in the previous post. Here’s the solution, a0, using DSolve:

Now let’s use Heaviside’s operator calculus. The differential equation translates to

,

so one way or another, we factor (and save the starting point):

First, let’s compute the inverse sequentially. We are saying that

then

and then

.

That is, I invert … call it g(x) for discussion purposes… and then I invert

. In practice, I didn’t name it… I just apply the second inverse to the output (%) of the first command.

The two answers we’ve gotten differ only in the definitions of the constants. In fact, I think the only difference is c1 = -2 C[2] (oh, and c2 = C[1]). Let me make those substitutions and subtract the two answers:

Note that even if I had correctly associated c1 with C[2], i.e. the constant associated with , if I don’t get the sign and the factor of 2, then

… it would look like my answers different considerably. The point is that it’s not always worthwhile to take the time to match the answers; instead, just verify that each does in fact satisfy the differential equation.

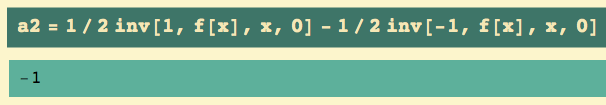

Alternatively, we can split into partial fractions (note the factors of 1/2 and one negative sign)…

… and to do this in one step I want

Note that both function calls used f(x). This was not a sequential process, but a parallel one.

In practice, I would simply make one call:

Again, the difference in answers is illusory: just change the definitions of the constants. What is common, however, is the particular solution y = -1.

Let’s confirm that we have three solutions. Yes, I’m certain everything is fine… but let’s check anyway.

Looking at the equation

I can write

That is, if I substitute a0 and a0′ for y[x] and y'[x], I get the right hand side of the differential equation.

The sequential answer, a1?

And the parallel answer a2?

(Hmm. I almost certainly had a %//Simplify when I actually executed that command, then removed it and forget to re-execute it. Oh, well.)

One last thing.

If we had set the constants of integration to zero, we would have obtained the particular solution (y = -1) to the inhomogeneous equation. Sequentially:

In parallel:

Of course, DSolve does everything for us… but I like knowing that there’s a quick way to get a particular solution to the inhomogeneous equation.

Let me also point out that having both the usual form of the differential equation

it is nice to be able to confirm that we got the same answers. I don’t know about you, but I have an annoying tendency to mistype differential equations. For some kinds of them, I now have a way of checking my inputs, because I get to type in the equation in two very different ways.

Before we move on to another example, let me emphasize that the two key computations – Heaviside’s approach – were for answers a1 and a2; everything else was auxiliary.

Example 2

Let’s try another example. This comes from Spiegel (top of the post).

First, using DSolve:

Now for Heaviside’s approach, get the factors:

Or I could have asked for the partial fraction expansion:

Proceeding sequentially, we use the first form, so our factors are D+1, D-1, and D:

… where the is applied to f… then

is applied to the result (%)… and then

is applied to that result (again %).

To work in parallel, we use the second form:

Let me be completely pedestrian again and verify that we have three solutions. I recall the differential equation…

… and then I substitute a0 and its derivatives for y and its derivatives. Do I get the right hand side?

Yes. DSolve got a solution. (That’s always good to know.)

For the sequential solution a1:

We’re good again.

For the parallel solution a2:

Example 3

(This comes from Kaj L. Nielsen “Differential Equations”, Barnes & Noble College Outline Series, 1962.)

Here is an example for which only the sequential solution is possible, because we have a repeated factor. In addition, I’m only going to look for a particular solution to the inhomogeneous equation.

First, set the right hand side, f(x):

Next, get the differential equation! (But save the factored form.)

That’s not all that bad to write out:

Let DSolve solve it – but then set all C[i] = 0. Oh, note that I wrote y instead of y[x] in DSolve. (I’m about to show you another facet of Mathematica.)

I have deliberately asked for the solution s1 as a pure function (from seeking y instead of y[x]), because it means that the following works:

That is, we have just verified that s1 is a solution of the differential equation. If the solution had not been a pure function, the substitution would have applied to y[x], but not to its derivatives. (And for no good reason, I’ll show you that in the next example.)

Recall the factored form:

Now just write the out 5 inverses. Since I only want a solution to the inhomogeneous equation, I set each constant of integration (the last parameter) to zero. The first inverse is applied to f(x), but the others are applied to the previous result (%):

That’s a much simpler function than the previous answer. Is it right? Here’s the differential equation:

Now I use a1 instead of y[x] etc.

Yes. a1 is a solution. And we already saw that a0 was.

Example 4

Let me do one last example to show you how to handle complex roots, and especially ones where Mathematica needs a little nudge. Although the example comes from Spiegel, he used additional results to cope with the complex roots. My point is that we don’t need to add any more techniques.

I set the right and side f(x):

Get the factors:

So much for that idea! OK, ask for the zeroes:

My experience is that isn’t enough: we need the real and imaginary parts – and that’s what //ComplexExpand got me. (That’s the nudge Mathematica needs.) But once we have explicit values, we can proceed. Here’s the sequential solution – again, I choose to find only the particular solution to the inhomogeneous equation, so my fourth parameter is 0 in both cases:

Is that really a solution? Here’s the given form:

so a1 is a solution.

What would DSolve have gotten? I write the ODE:

While I’m at it, let me show you the failure of s1 in this form:

He has substituted for y[x], but not for any of the derivatives. That’s what a pure function got me in the previous example.

Since I only want a particular solution to the inhomogeneous equation, let me set all C[i] = 0, and get the answer in a form suitable for comparison.

and is that the same as I got using Heaviside?

Yes.

So.

If we can factor the differential equation, we can use Heaviside’s method sequentially, even for complex roots. If all the factors are distinct, we have a parallel alternative, using the partial fraction decomposition. Note that the roots must be constants, not functions.

We can get a particular solution to the inhomogeneous equation by setting all the constants of integration to zero.

We also saw that if we want to check DSolve easily, we want to ask for its solution as a pure function y rather than as y[x].

August 27, 2012 at 7:25 pm

I’m interested. I don’t recall having ever heard of this method before but it certainly looks fun. (Are there any methods of turning differential equations into polynomials that aren’t fun?)

February 12, 2019 at 11:24 am

also here are and other observed prof dr mircea orasanu very important of Heaviside function and distribution theory